IM532 3.0 Applied Time Series Forecasting

MSc in Industrial Mathematics

Introduction to ARIMA models

8 March 2020

Stochastic processes

"A statistical phenomenon that evolves in time according to probabilistic laws is called a stochastic process." (Box, George EP, et al. Time series analysis: forecasting and control.)

Non-deterministic time series or statistical time series

A sample realization from an infinite population of time series that could have been generated by a stochastic process.

Probabilistic time series model

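Let $\{X_1, X_2, \dots\}$ be a sequence of random variables. A probabilistic time series model specifies, for every $n = 1, 2, \dots$, the joint distribution function of the random vector $[X_1, X_2, \dots, X_n]'$:

$P[X_1 \le x_1, X_2 \le x_2, \dots, X_n \le x_n]$

where $-\infty < x_1, \dots, x_n < \infty$.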

Mean function

The mean function of $\{X_t\}$ is

$\mu_X(t) = E(X_t)$.

Covariance function

The covariance function of $\{X_t\}$ is

$\gamma_X(r, s) = \operatorname{Cov}(X_r, X_s) = E[(X_r - \mu_X(r))(X_s - \mu_X(s))]$

for all integers $r$ and $s$.

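When $\gamma_X(t+h, t)$ does not depend on $t$ (weak stationarity, defined below), the covariance function at lag $h$ is defined by $\gamma_X(h) := \gamma_X(h, 0) = \gamma_X(t+h, t) = \operatorname{Cov}(X_{t+h}, X_t)$.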

Autocovariance function

The autocovariance function of $\{X_t\}$ at lag $h$ is

$\gamma_X(h) = \operatorname{Cov}(X_{t+h}, X_t)$.

Autocorrelation function

The autocorrelation function of $\{X_t\}$ at lag $h$ is

$\rho_X(h) = \frac{\gamma_X(h)}{\gamma_X(0)} = \operatorname{Cor}(X_{t+h}, X_t)$.
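In practice $\rho_X(h)$ has to be estimated from one observed series $x_1, \dots, x_n$. The following is a minimal Python sketch of the usual sample estimates, $\hat\gamma(h) = \frac{1}{n}\sum_{t=1}^{n-h}(x_{t+h} - \bar{x})(x_t - \bar{x})$ and $\hat\rho(h) = \hat\gamma(h)/\hat\gamma(0)$. The function names `sample_acov` and `sample_acf` are illustrative and are not taken from any library.

```python
def sample_acov(x, h):
    """Sample autocovariance at lag h, with the 1/n divisor."""
    n = len(x)
    xbar = sum(x) / n
    return sum((x[t + h] - xbar) * (x[t] - xbar) for t in range(n - h)) / n


def sample_acf(x, max_lag):
    """Sample autocorrelations rho_hat(0), ..., rho_hat(max_lag)."""
    g0 = sample_acov(x, 0)
    return [sample_acov(x, h) / g0 for h in range(max_lag + 1)]


# A series that alternates in sign has a strongly negative lag-1 autocorrelation.
x = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
print(sample_acf(x, 2))   # [1.0, -0.833..., 0.666...]
```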

Weakly stationary

A time series $\{X_t\}$ is called weakly stationary if

$\mu_X(t)$ is independent of $t$.

$\gamma_X(t+h, t)$ is independent of $t$ for each $h$.

In other words, the mean and the autocovariance structure (and hence the variance and the autocorrelation) do not depend on the time at which the series is observed; in particular, there is no trend or seasonality. However, a time series with cyclic behaviour (but no trend or seasonality) can still be stationary, because the cycles are not of a fixed length.

Strict stationarity of a time series

A time series $\{X_t\}$ is called strictly stationary if the random vectors $[X_1, X_2, \dots, X_n]'$ and $[X_{1+h}, X_{2+h}, \dots, X_{n+h}]'$ have the same joint distribution for all integers $h$ and $n > 0$.

Simple time series models

1. iid noise

No trend or seasonal component.

Observations are independent and identically distributed (iid) random variables with zero mean.

Notation: $\{X_t\} \sim \text{IID}(0, \sigma^2)$

It plays an important role as a building block for more complicated time series models.

Joint distribution function of iid noise

$P[X_1 \le x_1, X_2 \le x_2, \dots, X_n \le x_n] = P[X_1 \le x_1] \cdots P[X_n \le x_n]$.

Let $F_X(\cdot)$ be the cumulative distribution function of each of the identically distributed random variables $X_1, X_2, \dots$ Then

$P[X_1 \le x_1, X_2 \le x_2, \dots, X_n \le x_n] = F_X(x_1) \cdots F_X(x_n)$.

There is no dependence between observations. Hence,

$P[X_{n+h} \le x \mid X_1 = x_1, \dots, X_n = x_n] = P[X_{n+h} \le x]$.

iid noise (cont.)

Examples of iid noise

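Sequence of iid random variables $\{X_t, t = 1, 2, \dots\}$ with $P(X_t = 1) = \frac{1}{2}$ and $P(X_t = 0) = \frac{1}{2}$. This sequence has mean $\frac{1}{2}$; subtracting the mean gives zero-mean iid noise.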

Standard normal iid noise generated in R:

```r
> rnorm(15)
 [1]  2.61665472 -1.47748881 -0.75695121 -1.70520956 -1.02075354  0.81739417
 [7]  0.01909737 -1.29025062  0.84205925  0.27636792 -1.37133390 -0.89830051
[13] -0.62070173  0.15142641  0.40849453
```

Question

Let $\{X_t\}$ be iid noise with $E(X_t^2) = \sigma^2 < \infty$. Show that $\{X_t\}$ is a stationary process.

Simple time series models

2. White noise

If $\{X_t\}$ is a sequence of uncorrelated random variables, each with zero mean and variance $\sigma^2$, then the sequence is called white noise, written $\{X_t\} \sim \text{WN}(0, \sigma^2)$.

Note: Every $\text{IID}(0, \sigma^2)$ sequence is $\text{WN}(0, \sigma^2)$, but not conversely.
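The "not conversely" part can be checked numerically. The sketch below uses the standard construction $X_t = Z_t Z_{t-1}$ with $\{Z_t\}$ iid $N(0, 1)$, which is not taken from these notes. $X_t$ has mean 0, variance 1 and zero autocorrelation at every non-zero lag, so it is $\text{WN}(0, 1)$. However, consecutive values share the factor $Z_t$, so they are dependent. The dependence shows up in the squares, whose theoretical lag-1 autocorrelation is $2/8 = 0.25$.

```python
import random


def sample_acf(x, max_lag):
    """Sample ACF with the 1/n divisor."""
    n = len(x)
    xbar = sum(x) / n
    d = [v - xbar for v in x]
    g0 = sum(v * v for v in d) / n
    return [sum(d[t + h] * d[t] for t in range(n - h)) / n / g0
            for h in range(max_lag + 1)]


rng = random.Random(1)
n = 100_000
z = [rng.gauss(0.0, 1.0) for _ in range(n + 1)]

# X_t = Z_t * Z_{t-1}: zero mean, variance 1, uncorrelated at every non-zero lag,
# but X_t and X_{t+1} share the factor Z_t, so they are not independent.
x = [z[t] * z[t - 1] for t in range(1, n + 1)]

r_x = sample_acf(x, 1)[1]                     # near 0, as for white noise
r_x2 = sample_acf([v * v for v in x], 1)[1]   # near 0.25, so not iid
print(round(r_x, 3), round(r_x2, 3))
```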

Simple time series models

3. Random walk

A random walk process is obtained by cumulatively summing iid random variables. If $\{S_t, t = 0, 1, 2, \dots\}$ is a random walk process, then

$S_0 = 0$

$S_1 = 0 + X_1$

$S_2 = 0 + X_1 + X_2$

...

$S_t = X_1 + X_2 + \dots + X_t$.
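The Monte Carlo sketch below shows how the spread of a random walk evolves. It assumes standard normal steps $X_t \sim N(0, 1)$; any iid steps with finite variance would behave the same way. Since the $X_t$ are independent, $\operatorname{Var}(S_t) = t\sigma^2$. The simulated variance of $S_{100}$ should therefore be about ten times that of $S_{10}$.

```python
import random


def random_walk(n, rng):
    """S_0 = 0 and S_t = X_1 + ... + X_t, with X_t iid N(0, 1)."""
    s = [0.0]
    for _ in range(n):
        s.append(s[-1] + rng.gauss(0.0, 1.0))
    return s


rng = random.Random(42)
walks = [random_walk(100, rng) for _ in range(5000)]


def var_at(t):
    """Sample variance of S_t across the simulated walks."""
    vals = [w[t] for w in walks]
    m = sum(vals) / len(vals)
    return sum((v - m) ** 2 for v in vals) / (len(vals) - 1)


# With sigma^2 = 1 the theory gives Var(S_t) = t: the spread grows with t.
print(var_at(0), round(var_at(10), 1), round(var_at(100), 1))
```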

Question

Is $\{S_t, t = 0, 1, 2, \dots\}$ a weakly stationary process?

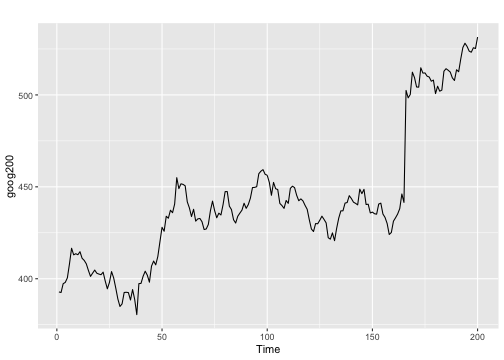

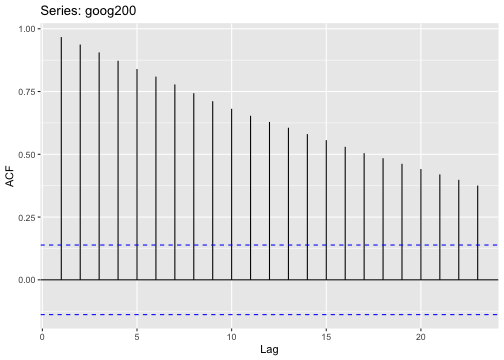

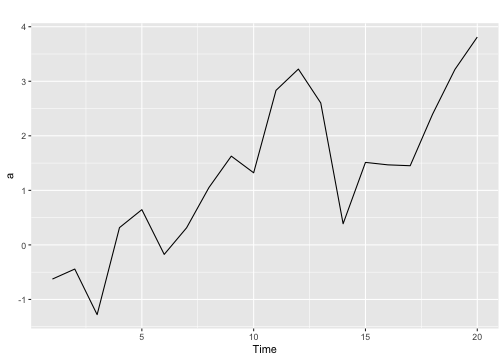

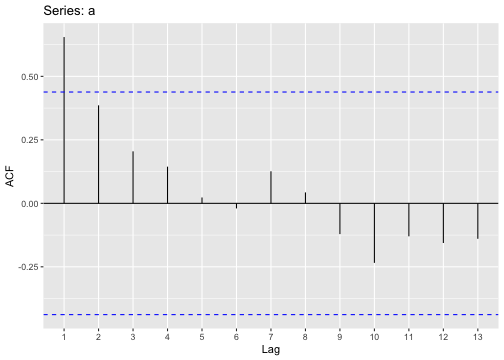

Identifying non-stationarity in the mean

Using time series plot

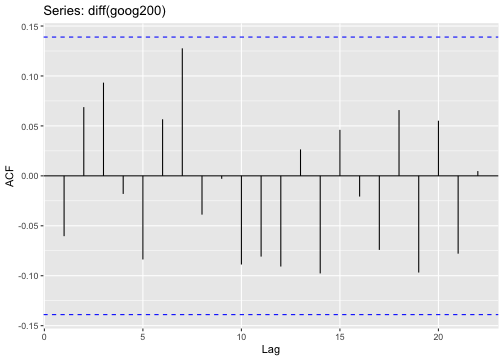

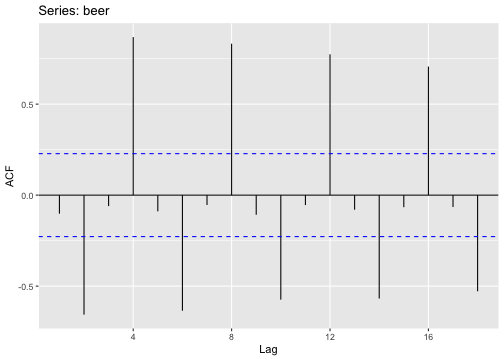

ACF plot

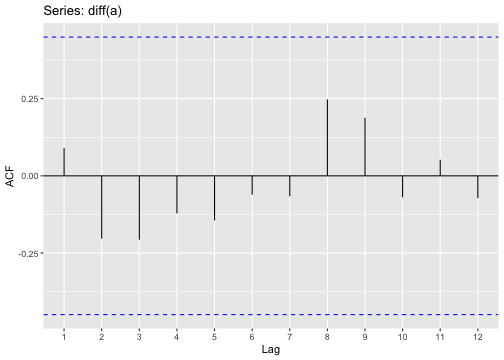

The ACF of a stationary time series drops to zero relatively quickly.

The ACF of a non-stationary series decreases slowly.

For a non-stationary series, the ACF at lag 1 is often large and positive.
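These rules of thumb can be checked on simulated data. The sketch below assumes a Gaussian random walk of length 5000 as the non-stationary example. Its sample ACF stays close to 1 for many lags. The ACF of its first difference, which is just the iid noise, is near zero at every lag $h \ge 1$.

```python
import itertools
import random


def sample_acf(x, max_lag):
    """Sample ACF with the 1/n divisor."""
    n = len(x)
    xbar = sum(x) / n
    d = [v - xbar for v in x]
    g0 = sum(v * v for v in d) / n
    return [sum(d[t + h] * d[t] for t in range(n - h)) / n / g0
            for h in range(max_lag + 1)]


rng = random.Random(7)
eps = [rng.gauss(0.0, 1.0) for _ in range(5000)]
walk = list(itertools.accumulate(eps))                          # non-stationary
diffed = [walk[t] - walk[t - 1] for t in range(1, len(walk))]   # back to iid noise

acf_walk = sample_acf(walk, 10)
acf_diff = sample_acf(diffed, 10)
print([round(r, 2) for r in acf_walk])   # large, positive, slowly decreasing
print([round(r, 2) for r in acf_diff])   # near 0 for every lag >= 1
```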

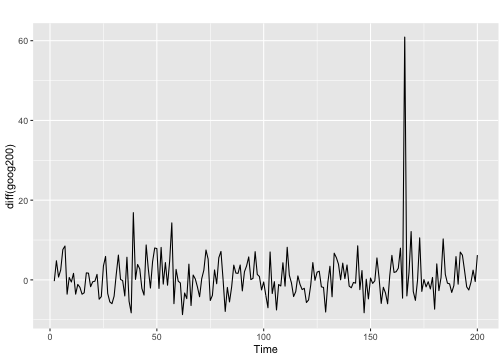

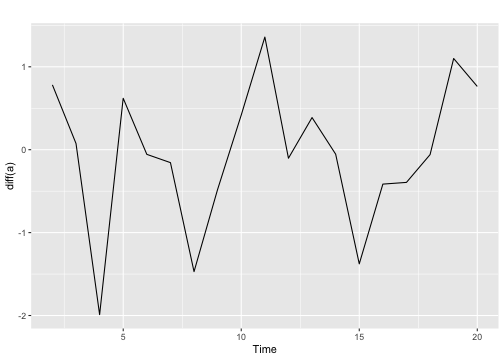

Elimination of Trend and Seasonality by Differencing

- Differencing helps to stabilize the mean.

Backshift notation:

$BX_t = X_{t-1}$

Ordinary differencing

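The first-order difference is defined as

$\nabla X_t = X_t - X_{t-1} = X_t - BX_t = (1 - B)X_t$, where $\nabla = 1 - B$.

The second-order difference

$\nabla^2 X_t = \nabla(\nabla X_t) = \nabla(X_t - X_{t-1}) = \nabla X_t - \nabla X_{t-1} = (X_t - X_{t-1}) - (X_{t-1} - X_{t-2}) = X_t - 2X_{t-1} + X_{t-2} = (1 - B)^2 X_t$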

- In practice, we seldom need to go beyond second order differencing.
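Below is a minimal Python sketch of ordinary differencing. The helper `difference` is hypothetical, though its `lag` and `differences` arguments mirror those of R's `diff()`. In the example, a quadratic trend becomes constant after two differences.

```python
def difference(x, lag=1, differences=1):
    """Apply (1 - B^lag) to x, `differences` times.

    Each pass drops the first `lag` values, since X_{t-lag} is not observed for them.
    """
    for _ in range(differences):
        x = [x[t] - x[t - lag] for t in range(lag, len(x))]
    return x


x = [t * t for t in range(1, 7)]             # quadratic trend: 1, 4, 9, 16, 25, 36
print(difference(x))                          # [3, 5, 7, 9, 11]: still trending
print(difference(x, differences=2))           # [2, 2, 2, 2]: constant

# Agrees with the expanded form X_t - 2 X_{t-1} + X_{t-2}.
direct = [x[t] - 2 * x[t - 1] + x[t - 2] for t in range(2, len(x))]
print(direct == difference(x, differences=2))  # True
```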

Seasonal differencing

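- The difference between an observation and the corresponding observation from the previous year.

$\nabla_m X_t = X_t - X_{t-m} = (1 - B^m)X_t$, where $m$ is the number of seasons: $m = 12$ for monthly data and $m = 4$ for quarterly data.

For monthly series

$\nabla_{12} X_t = X_t - X_{t-12}$

Twice-differenced series (a seasonal difference followed by a first difference)

$\nabla\nabla_{12} X_t = \nabla_{12} X_t - \nabla_{12} X_{t-1} = (X_t - X_{t-12}) - (X_{t-1} - X_{t-13})$

If the seasonality is strong, the seasonal differencing should be done first. The seasonally differenced series may already be stationary, in which case no further first difference is needed.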
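The sketch below applies seasonal differencing to a hypothetical, noise-free monthly series. The series is a linear trend (slope 0.5 per month) plus a fixed 12-month pattern. A lag-12 difference removes the pattern and leaves the constant $12 \times 0.5 = 6$. A further first difference removes that constant, matching the expanded twice-differenced form $(X_t - X_{t-12}) - (X_{t-1} - X_{t-13})$. The helper `difference` is a hypothetical name.

```python
def difference(x, lag=1):
    """One pass of (1 - B^lag): x_t - x_{t-lag} for t = lag, ..., n - 1."""
    return [x[t] - x[t - lag] for t in range(lag, len(x))]


# Hypothetical monthly series: linear trend plus a fixed 12-month pattern, no noise.
pattern = [5, 3, 0, -2, -4, -6, -5, -3, 0, 2, 6, 4]
x = [0.5 * t + pattern[t % 12] for t in range(48)]

seasonal = difference(x, lag=12)   # pattern cancels; 12 * 0.5 = 6 remains
both = difference(seasonal)        # the remaining constant also cancels
print(set(seasonal), set(both))    # {6.0} {0.0}

expanded = [(x[t] - x[t - 12]) - (x[t - 1] - x[t - 13]) for t in range(13, len(x))]
print(expanded == both)            # True
```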

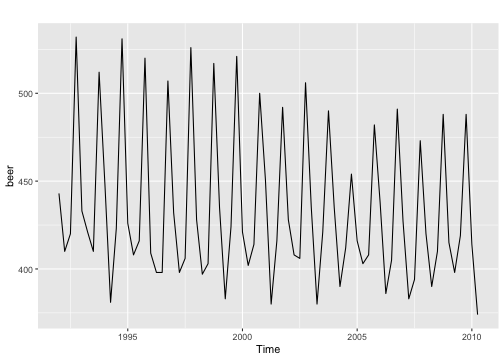

Original series

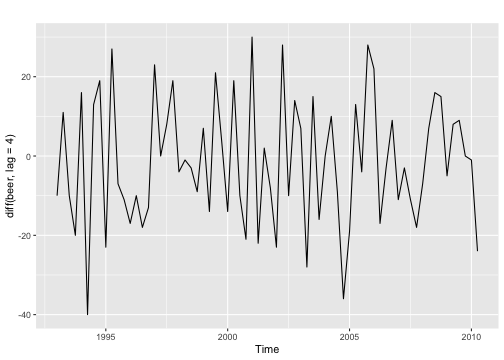

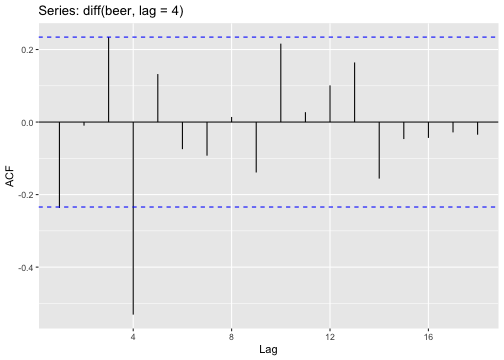

Differenced series

- For a stationary time series, the ACF will drop to zero relatively quickly, while the ACF of non-stationary data decreases slowly.

Figures: original vs. differenced series; original vs. seasonally differenced series.

Linear filter model

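A linear filter is an operation $L$ that transforms a white noise process $\{\epsilon_t\}$ into another time series $\{X_t\}$. Taking the mean to be zero, $X_t = \psi(B)\epsilon_t = \sum_{j=0}^{\infty} \psi_j \epsilon_{t-j}$ with $\psi_0 = 1$, where $\psi(B) = 1 + \psi_1 B + \psi_2 B^2 + \dots$ is the transfer function of the filter.

White noise $\epsilon_t$ → Linear filter $\psi(B)$ → Output $X_t$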
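Below is a minimal sketch of the diagram above for a filter with finitely many weights, $\psi(B) = \psi_0 + \psi_1 B + \dots + \psi_q B^q$. The finite length is an assumption, made so the sum can be computed, and the weights $1, 0.5, 0.25$ are hypothetical. Feeding in a single unit shock returns the weights themselves, which is the filter's impulse response.

```python
import random


def linear_filter(psi, eps):
    """X_t = psi[0] eps_t + psi[1] eps_{t-1} + ... + psi[q] eps_{t-q}.

    Outputs start at the first t for which all q lagged shocks are available.
    """
    q = len(psi) - 1
    return [sum(psi[j] * eps[t - j] for j in range(q + 1))
            for t in range(q, len(eps))]


psi = [1.0, 0.5, 0.25]                   # hypothetical filter weights

rng = random.Random(0)
eps = [rng.gauss(0.0, 1.0) for _ in range(10)]
x = linear_filter(psi, eps)               # 8 outputs from 10 white-noise inputs

impulse = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(linear_filter(psi, impulse))        # [1.0, 0.5, 0.25, 0.0, 0.0, 0.0]
```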

Autoregressive models

current value = linear combination of past values + current error

An autoregressive model of order $p$, an AR($p$) model, can be written as

$x_t = c + \phi_1 x_{t-1} + \phi_2 x_{t-2} + \dots + \phi_p x_{t-p} + \epsilon_t$, where $\epsilon_t$ is white noise.

- Similar to a multiple linear regression model, but with lagged values of $x_t$ as predictors.

Question

Show that (with $c = 0$) the AR($p$) model is a linear filter with transfer function $\phi^{-1}(B)$, where $\phi(B) = 1 - \phi_1 B - \phi_2 B^2 - \dots - \phi_p B^p$.
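Here is a minimal numerical sketch to accompany this question. For $c = 0$, the weights of $\psi(B) = \phi^{-1}(B)$ follow from matching powers of $B$ in $\phi(B)\psi(B) = 1$. This gives $\psi_0 = 1$ and $\psi_j = \phi_1\psi_{j-1} + \dots + \phi_p\psi_{j-p}$, with $\psi_i = 0$ for $i < 0$. For AR(1) the recursion reproduces $\psi_j = \phi_1^j$. For a hypothetical AR(2) with $\phi = (0.5, 0.3)$, filtering the noise through the truncated weights gives the same value as running the AR recursion. The name `ar_psi_weights` is illustrative.

```python
import random


def ar_psi_weights(phi, n_weights):
    """First n_weights coefficients of psi(B) = 1 / phi(B), phi(B) = 1 - phi_1 B - ... - phi_p B^p."""
    psi = [1.0]
    for j in range(1, n_weights):
        psi.append(sum(phi[i - 1] * psi[j - i]
                       for i in range(1, len(phi) + 1) if j - i >= 0))
    return psi


print(ar_psi_weights([0.6], 5))            # powers of 0.6 (up to rounding)

phi = [0.5, 0.3]                           # hypothetical stationary AR(2)
rng = random.Random(3)
eps = [rng.gauss(0.0, 1.0) for _ in range(400)]

# AR recursion started from x_0 = x_1 = 0.
x = [0.0, 0.0]
for t in range(2, len(eps)):
    x.append(phi[0] * x[t - 1] + phi[1] * x[t - 2] + eps[t])

# Linear-filter form using the first 200 psi-weights.
psi = ar_psi_weights(phi, 200)
t_last = len(eps) - 1
x_filter = sum(psi[j] * eps[t_last - j] for j in range(len(psi)))
print(abs(x[t_last] - x_filter))           # tiny: the neglected weights are negligible
```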

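Stationarity condition for AR(p)

The roots of the characteristic equation $\phi(B) = 0$, regarded as a polynomial equation in $B$, must lie outside the unit circle. That is, every root $z$ must satisfy $|z| > 1$.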
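Below is a minimal Python sketch of this check for AR(1) and AR(2) models. Restricting to degree at most 2 is an assumption of the sketch, so that the roots come from the quadratic formula; higher orders need a general polynomial root finder. For AR(2), the result is compared with the equivalent conditions $\phi_1 + \phi_2 < 1$, $\phi_2 - \phi_1 < 1$ and $|\phi_2| < 1$. The parameter values and function names are hypothetical.

```python
import cmath


def lag_poly_roots(a):
    """Roots of 1 + a[0] z + a[1] z^2 (degree at most 2 in this sketch)."""
    if len(a) > 2:
        raise ValueError("this sketch only handles degree <= 2")
    a1 = a[0] if len(a) > 0 else 0.0
    a2 = a[1] if len(a) > 1 else 0.0
    if a2 == 0:
        return [] if a1 == 0 else [-1.0 / a1]
    disc = cmath.sqrt(a1 * a1 - 4.0 * a2)
    return [(-a1 + disc) / (2.0 * a2), (-a1 - disc) / (2.0 * a2)]


def ar_is_stationary(phi):
    """phi(B) = 1 - phi_1 B - ...: every root must satisfy |z| > 1."""
    return all(abs(z) > 1.0 for z in lag_poly_roots([-p for p in phi]))


def ar2_triangle(phi1, phi2):
    """Equivalent stationarity region for AR(2)."""
    return phi1 + phi2 < 1 and phi2 - phi1 < 1 and abs(phi2) < 1


print(ar_is_stationary([0.6]))   # True: root 1/0.6 lies outside the unit circle
print(ar_is_stationary([1.0]))   # False: root 1 is on the unit circle (random walk)
for p1, p2 in [(0.5, 0.3), (0.5, 0.6), (0.2, -0.9), (0.2, -1.1)]:
    print((p1, p2), ar_is_stationary([p1, p2]), ar2_triangle(p1, p2))
```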

Stationarity Conditions for AR(1)

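Consider the AR(1) process

$x_t = \phi_1 x_{t-1} + \epsilon_t$.

Then,

$(1 - \phi_1 B)x_t = \epsilon_t$.

This may be written as $x_t = (1 - \phi_1 B)^{-1}\epsilon_t = \sum_{j=0}^{\infty} \phi_1^j \epsilon_{t-j}$. Hence,

$\psi(B) = (1 - \phi_1 B)^{-1} = \sum_{j=0}^{\infty} \phi_1^j B^j$

This expansion is a geometric series in $\phi_1 B$, which converges when $|\phi_1| < 1$. In that case the AR(1) process is stationary.

This is equivalent to saying that the root $B = 1/\phi_1$ of $1 - \phi_1 B = 0$ must lie outside the unit circle.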

Geometric series

$a + ar + ar^2 + ar^3 + \dots = \frac{a}{1 - r}$

for $|r| < 1$.

AR(1) process

$x_t = c + \phi_1 x_{t-1} + \epsilon_t$,

when $\phi_1 = 0$ and $c = 0$ - equivalent to a white noise process

when $\phi_1 = 1$ and $c = 0$ - random walk

when $\phi_1 = 1$ and $c \neq 0$ - random walk with drift

when $\phi_1 < 0$ - tends to oscillate around the mean
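The simulation below illustrates the $\phi_1 < 0$ case. It assumes $c = 0$, Gaussian noise and the hypothetical values $\phi_1 = \pm 0.8$. With $\phi_1 = -0.8$, consecutive values fall on opposite sides of the mean far more often than with $\phi_1 = 0.8$. The comparison value $\arccos(\phi_1)/\pi$ is the probability that two jointly normal, zero-mean variables with correlation $\phi_1$ have opposite signs.

```python
import math
import random


def simulate_ar1(phi, n, rng, c=0.0, burn_in=500):
    """x_t = c + phi * x_{t-1} + eps_t, eps_t iid N(0, 1); the first burn_in values are dropped."""
    x, out = 0.0, []
    for t in range(n + burn_in):
        x = c + phi * x + rng.gauss(0.0, 1.0)
        if t >= burn_in:
            out.append(x)
    return out


def sign_change_rate(x):
    """Fraction of consecutive pairs on opposite sides of zero (the mean when c = 0)."""
    return sum(1 for a, b in zip(x, x[1:]) if a * b < 0) / (len(x) - 1)


rng = random.Random(11)
rates = {phi: sign_change_rate(simulate_ar1(phi, 20_000, rng)) for phi in (0.8, -0.8)}
for phi, rate in rates.items():
    # For a zero-mean Gaussian AR(1) the expected rate is arccos(phi) / pi.
    print(phi, round(rate, 3), round(math.acos(phi) / math.pi, 3))
```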

Find the mean, variance and autocorrelation function of a stationary AR(1) process.

Help:

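$\operatorname{Cov}(X+Y, X+Y) = \operatorname{Var}(X+Y) = \operatorname{Var}(X) + \operatorname{Var}(Y) + 2\operatorname{Cov}(X, Y)$

$\operatorname{Cov}(X, Y) = E[(X - \mu_X)(Y - \mu_Y)]$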

Question: Properties of the AR(2) process

Find the mean, variance and autocorrelation function.

Yule-Walker Equations

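The Yule-Walker equations express the autoregressive parameters in terms of the autocorrelations. Writing the ACF recursion below for $k = 1, \dots, p$ gives $p$ linear equations in $\phi_1, \dots, \phi_p$.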

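Autocorrelation function of an AR(p) process

The autocorrelation function of an AR(p) process satisfies

$\rho_k = \phi_1 \rho_{k-1} + \phi_2 \rho_{k-2} + \dots + \phi_p \rho_{k-p}$ for $k > 0$.

This can be written as

$\phi(B)\rho_k = 0$, where $\phi(B) = 1 - \phi_1 B - \dots - \phi_p B^p$ and $B$ operates on the lag $k$, that is, $B\rho_k = \rho_{k-1}$.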
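For AR(2), the recursion can be run directly once $\rho_1$ is known. The $k = 1$ equation, with $\rho_{-1} = \rho_1$, gives $\rho_1 = \phi_1/(1 - \phi_2)$. Conversely, the $k = 1, 2$ equations (the Yule-Walker equations for $p = 2$) can be solved for $(\phi_1, \phi_2)$. The sketch below uses hypothetical parameters. In practice, the sample autocorrelations would be plugged into the (hypothetically named) `yule_walker_ar2` to estimate the parameters.

```python
def ar2_acf(phi1, phi2, max_lag):
    """Theoretical ACF of a stationary AR(2) via rho_k = phi1 rho_{k-1} + phi2 rho_{k-2}."""
    rho = [1.0, phi1 / (1.0 - phi2)]
    for k in range(2, max_lag + 1):
        rho.append(phi1 * rho[k - 1] + phi2 * rho[k - 2])
    return rho[: max_lag + 1]


def yule_walker_ar2(rho1, rho2):
    """Solve rho1 = phi1 + phi2 rho1 and rho2 = phi1 rho1 + phi2 for (phi1, phi2)."""
    denom = 1.0 - rho1 ** 2
    return rho1 * (1.0 - rho2) / denom, (rho2 - rho1 ** 2) / denom


rho = ar2_acf(0.5, 0.3, 6)                  # hypothetical AR(2) parameters
print([round(r, 4) for r in rho])
print(yule_walker_ar2(rho[1], rho[2]))      # recovers (0.5, 0.3) up to rounding

# With phi2 = 0 this is AR(1): rho_k = phi1**k, alternating in sign when phi1 < 0.
print([round(r, 4) for r in ar2_acf(-0.7, 0.0, 4)])   # [1.0, -0.7, 0.49, -0.343, 0.2401]
```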

For the AR(1) process

$\rho_k = \phi_1 \rho_{k-1}$ for $k > 0$. Since $\rho_0 = 1$, for $k \ge 1$ we get

$\rho_k = \phi_1^k$

When $0 < \phi_1 < 1$, the autocorrelation function decays exponentially to zero.

When $-1 < \phi_1 < 0$, the autocorrelation function decays exponentially to zero and oscillates in sign.

Partial Autocorrelation Function

Conditional autocorrelation between $X_t$ and $X_{t+k}$ given $X_{t+1}, X_{t+2}, \dots, X_{t+k-1}$:

$\operatorname{Cor}(X_t, X_{t+k} \mid X_{t+1}, X_{t+2}, \dots, X_{t+k-1})$

The partial autocorrelation function $\phi_{kk}$ of an AR(p) process is non-zero at $k = p$ (where $\phi_{pp} = \phi_p$), may be non-zero for $k < p$, and is zero for $k > p$.
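The PACF can be computed from the ACF with the Durbin-Levinson recursion, a standard algorithm that is not derived in these notes. The sketch below uses the theoretical ACFs of two hypothetical models: an AR(1) with $\phi_1 = 0.6$ (so $\rho_k = 0.6^k$) and an AR(2) with $(\phi_1, \phi_2) = (0.5, 0.3)$. The partial autocorrelations vanish beyond lag 1 and lag 2 respectively, and the last non-zero value equals the last AR coefficient. The name `pacf_from_acf` is illustrative.

```python
def pacf_from_acf(rho, max_lag):
    """phi_kk for k = 1..max_lag via Durbin-Levinson; rho[0] = 1, rho[k] = ACF at lag k."""
    pacf, prev = [], []                      # prev holds phi_{k-1,1}, ..., phi_{k-1,k-1}
    for k in range(1, max_lag + 1):
        num = rho[k] - sum(prev[j - 1] * rho[k - j] for j in range(1, k))
        den = 1.0 - sum(prev[j - 1] * rho[j] for j in range(1, k))
        phi_kk = num / den
        # phi_{k,j} = phi_{k-1,j} - phi_kk * phi_{k-1,k-j}, for j = 1..k-1
        prev = [prev[j - 1] - phi_kk * prev[k - j - 1] for j in range(1, k)] + [phi_kk]
        pacf.append(phi_kk)
    return pacf


# AR(1) with phi_1 = 0.6
ar1 = pacf_from_acf([0.6 ** k for k in range(6)], 5)
print([round(v, 6) for v in ar1])     # 0.6 at lag 1, then zeros

# AR(2) with (phi_1, phi_2) = (0.5, 0.3): rho_1 = phi_1 / (1 - phi_2), then the recursion
rho = [1.0, 0.5 / 0.7]
for k in range(2, 6):
    rho.append(0.5 * rho[k - 1] + 0.3 * rho[k - 2])
ar2 = pacf_from_acf(rho, 5)
print([round(v, 6) for v in ar2])     # non-zero at lags 1 and 2 (lag 2 is 0.3), then zeros
```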

Calculations: in-class

Moving average models

Current value = linear combination of past forecast errors + current error

An MA($q$) process can be written as

$x_t = c + \epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q}$, where $\epsilon_t$ is white noise.

The current value $x_t$ can be thought of as a weighted moving average of the past few forecast errors.

An MA($q$) process is always stationary.
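The sketch below illustrates the cut-off property for a hypothetical MA(2) with $\theta = (0.6, 0.4)$, $c = 0$ and Gaussian noise. The theoretical values use $\gamma_k = \sigma^2\sum_j \theta_j\theta_{j+k}$ (with $\theta_0 = 1$), which is zero for $k > q$. The sample ACF of a long simulated series should be close to these values. The function names are illustrative.

```python
import random


def sample_acf(x, max_lag):
    """Sample ACF with the 1/n divisor."""
    n = len(x)
    xbar = sum(x) / n
    d = [v - xbar for v in x]
    g0 = sum(v * v for v in d) / n
    return [sum(d[t + h] * d[t] for t in range(n - h)) / n / g0
            for h in range(max_lag + 1)]


def ma_acf(theta, max_lag):
    """Theoretical MA(q) ACF, gamma_k proportional to sum_j theta_j theta_{j+k}, theta_0 = 1."""
    th = [1.0] + list(theta)
    g = [sum(th[j] * th[j + k] for j in range(len(th) - k)) if k < len(th) else 0.0
         for k in range(max_lag + 1)]
    return [v / g[0] for v in g]


def simulate_ma(theta, n, rng, c=0.0):
    """x_t = c + eps_t + theta_1 eps_{t-1} + ... + theta_q eps_{t-q}, eps_t iid N(0, 1)."""
    q = len(theta)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n + q)]
    return [c + eps[t] + sum(theta[j - 1] * eps[t - j] for j in range(1, q + 1))
            for t in range(q, n + q)]


theta = [0.6, 0.4]                            # hypothetical MA(2)
theory = ma_acf(theta, 5)                     # 1, 0.553, 0.263, then exactly 0
sample = sample_acf(simulate_ma(theta, 20_000, random.Random(5)), 5)
print([round(r, 3) for r in theory])
print([round(r, 3) for r in sample])          # close to theory; near 0 beyond lag 2
```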

- Any stationary AR(p) model can be written as an MA($\infty$) model using repeated substitution.

Invertibility condition of an MA model

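$x_t = c + \epsilon_t + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q}$. Using the backshift operator,

$x_t = c + \epsilon_t + \theta_1 B\epsilon_t + \theta_2 B^2 \epsilon_t + \dots + \theta_q B^q \epsilon_t$.

Hence,

$x_t = c + \theta_q(B)\epsilon_t$, where $\theta_q(B) = 1 + \theta_1 B + \theta_2 B^2 + \dots + \theta_q B^q$.

$\theta_q(B)$ is called the MA operator.

An MA(q) process can be written as an AR($\infty$) process if its parameters satisfy certain constraints; such a process is called invertible.

An MA model is invertible if the roots of $\theta_q(B) = 0$ lie outside the unit circle.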
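The sketch below illustrates why invertibility matters, for MA(1) with $c = 0$. To recover the shocks from the data we run $\epsilon_t = x_t - \theta_1\epsilon_{t-1}$. This recursion needs a starting value $\epsilon_0$ that is never observed. If the guess is off by $e_0$, the error at time $t$ is $(-\theta_1)^t e_0$. The values $\theta_1 = 0.5$ and $\theta_1 = 2$ are hypothetical, as is the helper name `recover_shocks`.

```python
import random


def recover_shocks(x, theta, eps0_guess=0.0):
    """Invert x_t = eps_t + theta eps_{t-1} recursively: eps_t = x_t - theta eps_{t-1}."""
    eps_prev, out = eps0_guess, []
    for value in x:
        eps_prev = value - theta * eps_prev
        out.append(eps_prev)
    return out


rng = random.Random(2)
true_eps = [rng.gauss(0.0, 1.0) for _ in range(61)]   # eps_0, ..., eps_60

errors = {}
for theta in (0.5, 2.0):
    x = [true_eps[t] + theta * true_eps[t - 1] for t in range(1, 61)]
    est = recover_shocks(x, theta)                     # eps_0 unknown: guessed as 0
    errors[theta] = abs(est[-1] - true_eps[-1])
print(errors)   # tiny for theta = 0.5, astronomically large for theta = 2
```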

MA(1) process

Obtain the invertibility condition of the MA(1) process.

Calculate the mean, variance, ACF and PACF of the MA(1) process.

MA(2) process

Calculate the mean, variance, ACF and PACF of the MA(2) process.

ACF and PACF of AR(p) vs. MA(q) processes

AR(p)

ACF: decays exponentially to zero (possibly with oscillation).

PACF: zero beyond the order $p$ of the process (cuts off after lag $p$).

MA(q)

ACF: zero beyond the order $q$ of the process (cuts off after lag $q$).

PACF: decays exponentially to zero.

ARMA process

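current value = linear combination of past values + linear combination of past errors + current error

The ARMA(p,q) model can be written as

$x_t = c + \phi_1 x_{t-1} + \phi_2 x_{t-2} + \dots + \phi_p x_{t-p} + \theta_1 \epsilon_{t-1} + \theta_2 \epsilon_{t-2} + \dots + \theta_q \epsilon_{t-q} + \epsilon_t$, where $\{\epsilon_t\}$ is a white noise process.

Using the backshift operator,

$\phi(B)x_t = c + \theta(B)\epsilon_t$, where $\phi(\cdot)$ and $\theta(\cdot)$ are polynomials of degree $p$ and $q$,

$\phi(B) = 1 - \phi_1 B - \dots - \phi_p B^p$ and $\theta(B) = 1 + \theta_1 B + \dots + \theta_q B^q$.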

ARMA(p, q) process

Stationarity condition

Roots of $\phi(B) = 0$ lie outside the unit circle.

Invertibility condition

Roots of $\theta(B) = 0$ lie outside the unit circle.

Question:

Determine which of the following processes are invertible and stationary.

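$x_t = 0.6x_{t-1} + \epsilon_t$

$x_t = \epsilon_t - 1.3\epsilon_{t-1} + 0.4\epsilon_{t-2}$

$x_t = 0.6x_{t-1} - 1.3\epsilon_{t-1} + 0.4\epsilon_{t-2} + \epsilon_t$

$x_t = x_{t-1} - 1.3\epsilon_{t-1} + 0.3\epsilon_{t-2} + \epsilon_t$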

ARMA(1, 1) model

Calculate the mean, variance and ACF of the ARMA(1, 1) process.

Non-seasonal ARIMA models

- Autoregressive Integrated Moving Average Model

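$x'_t = c + \phi_1 x'_{t-1} + \dots + \phi_p x'_{t-p} + \theta_1 \epsilon_{t-1} + \dots + \theta_q \epsilon_{t-q} + \epsilon_t$, where $x'_t$ is the differenced series (it may have been differenced more than once).

Using the backshift notation

$(1 - \phi_1 B - \dots - \phi_p B^p)(1 - B)^d x_t = c + (1 + \theta_1 B + \dots + \theta_q B^q)\epsilon_t$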
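The sketch below covers the case $p = 1$, $d = 1$, $q = 0$, with $c = 0$ and a hypothetical $\phi_1 = 0.6$. The differenced series $x'_t$ is simulated as a stationary AR(1). $x_t$ is then obtained by cumulative summation, so that $(1 - \phi_1 B)(1 - B)x_t = \epsilon_t$. A single difference of $x_t$ gives back a series whose lag-1 sample autocorrelation is close to $\phi_1$. The helper `acf_lag1` is an illustrative name.

```python
import itertools
import random


def acf_lag1(x):
    """Lag-1 sample autocorrelation (1/n divisor in numerator and denominator)."""
    n = len(x)
    xbar = sum(x) / n
    d = [v - xbar for v in x]
    return sum(d[t + 1] * d[t] for t in range(n - 1)) / sum(v * v for v in d)


rng = random.Random(9)
phi, n = 0.6, 20_000                        # hypothetical ARIMA(1,1,0), c = 0

# Stationary AR(1) for the differenced series x'_t.
xdiff, prev = [], 0.0
for _ in range(n):
    prev = phi * prev + rng.gauss(0.0, 1.0)
    xdiff.append(prev)

x = list(itertools.accumulate(xdiff))       # x_t = x_{t-1} + x'_t: non-stationary
recovered = [x[t] - x[t - 1] for t in range(1, n)]   # d = 1 difference

print(round(acf_lag1(x), 3))                # close to 1: needs differencing
print(round(acf_lag1(recovered), 3))        # close to 0.6
```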

We call this an ARIMA(p,d,q) model.

p - order of the autoregressive part

d - degree of first differencing involved

q - order of the moving average part

Seasonal ARIMA model

Seasonal differencing

For monthly series

$(1 - B^{12})x_t = x_t - x_{t-12}$

For quarterly series

$(1 - B^4)x_t = x_t - x_{t-4}$

Non-seasonal differencing

$(1 - B)x_t = x_t - x_{t-1}$

Multiply terms together

$(1 - B)(1 - B^m)x_t = (1 - B - B^m + B^{m+1})x_t = x_t - x_{t-1} - x_{t-m} + x_{t-m-1}$.
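The multiplication can be checked mechanically by treating operators in $B$ as polynomials. The sketch below does this for monthly data, $m = 12$. The helpers `poly_mul`, `seasonal_diff_poly` and `apply_lag_poly` are hypothetical names.

```python
def poly_mul(a, b):
    """Multiply two polynomials in B given as coefficient lists [c_0, c_1, ...]."""
    out = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


def seasonal_diff_poly(m):
    """Coefficients of 1 - B^m."""
    return [1] + [0] * (m - 1) + [-1]


def apply_lag_poly(coeffs, x):
    """y_t = sum_k coeffs[k] * x_{t-k}, for every t where all lags are available."""
    p = len(coeffs) - 1
    return [sum(c * x[t - k] for k, c in enumerate(coeffs)) for t in range(p, len(x))]


prod = poly_mul([1, -1], seasonal_diff_poly(12))        # (1 - B)(1 - B^12)
print({k: c for k, c in enumerate(prod) if c != 0})     # {0: 1, 1: -1, 12: -1, 13: 1}

x = [t * t + (t % 12) for t in range(40)]                # any series will do
direct = [x[t] - x[t - 1] - x[t - 12] + x[t - 13] for t in range(13, len(x))]
print(apply_lag_poly(prod, x) == direct)                 # True
```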

Seasonal ARIMA models

ARIMA$(p,d,q)(P,D,Q)_m$

(p,d,q) - non-seasonal part of the model

(P,D,Q) - seasonal part of the model

m - number of observations per year

ARIMA$(1,1,1)(1,1,1)_4$

$(1 - \phi_1 B)(1 - \Phi_1 B^4)(1 - B)(1 - B^4)x_t = (1 + \theta_1 B)(1 + \Theta_1 B^4)\epsilon_t$

$(1 - \phi_1 B)$ - Non-seasonal AR(1)

$(1 - \Phi_1 B^4)$ - Seasonal AR(1)

$(1 - B)$ - Non-seasonal difference

$(1 - B^4)$ - Seasonal difference

$(1 + \theta_1 B)$ - Non-seasonal MA(1)

$(1 + \Theta_1 B^4)$ - Seasonal MA(1)
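Expanding the operators shows the difference equation that ARIMA$(1,1,1)(1,1,1)_4$ actually imposes on $x_t$. The sketch below uses the hypothetical parameter values $\phi_1 = 0.5$, $\Phi_1 = 0.3$, $\theta_1 = 0.4$ and $\Theta_1 = 0.2$, chosen only for illustration. `poly_mul` is a hypothetical helper that multiplies polynomials in $B$.

```python
def poly_mul(a, b):
    """Multiply two polynomials in B given as coefficient lists [c_0, c_1, ...]."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out


phi1, Phi1, theta1, Theta1 = 0.5, 0.3, 0.4, 0.2     # hypothetical values

ar_side = [1.0]
for factor in ([1, -phi1],                # non-seasonal AR(1)
               [1, 0, 0, 0, -Phi1],       # seasonal AR(1)
               [1, -1],                   # non-seasonal difference
               [1, 0, 0, 0, -1]):         # seasonal difference
    ar_side = poly_mul(ar_side, factor)

ma_side = poly_mul([1, theta1], [1, 0, 0, 0, Theta1])

print([round(c, 4) for c in ar_side])
# [1.0, -1.5, 0.5, 0.0, -1.3, 1.95, -0.65, 0.0, 0.3, -0.45, 0.15]
print([round(c, 4) for c in ma_side])
# [1.0, 0.4, 0.0, 0.0, 0.2, 0.08]
```

Moving every term except $x_t$ to the right-hand side, the model reads $x_t = 1.5x_{t-1} - 0.5x_{t-2} + 1.3x_{t-4} - 1.95x_{t-5} + 0.65x_{t-6} - 0.3x_{t-8} + 0.45x_{t-9} - 0.15x_{t-10} + \epsilon_t + 0.4\epsilon_{t-1} + 0.2\epsilon_{t-4} + 0.08\epsilon_{t-5}$ for these hypothetical values.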

Question

- Write down the model for

ARIMA$(0,0,1)(0,0,1)_{12}$

ARIMA$(1,0,0)(1,0,0)_{12}$